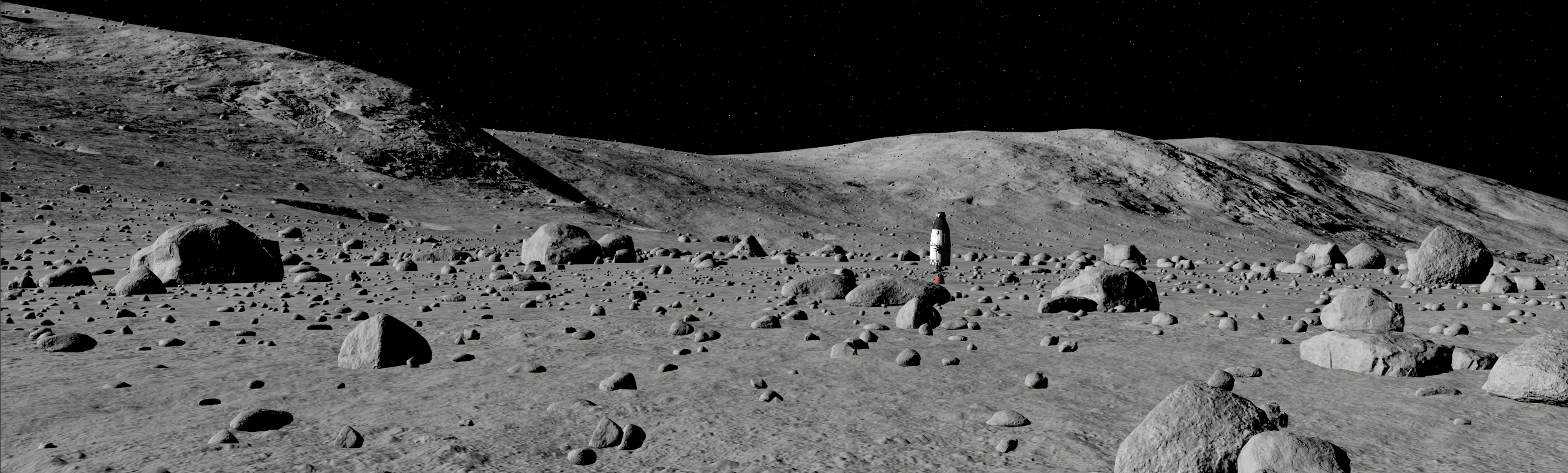

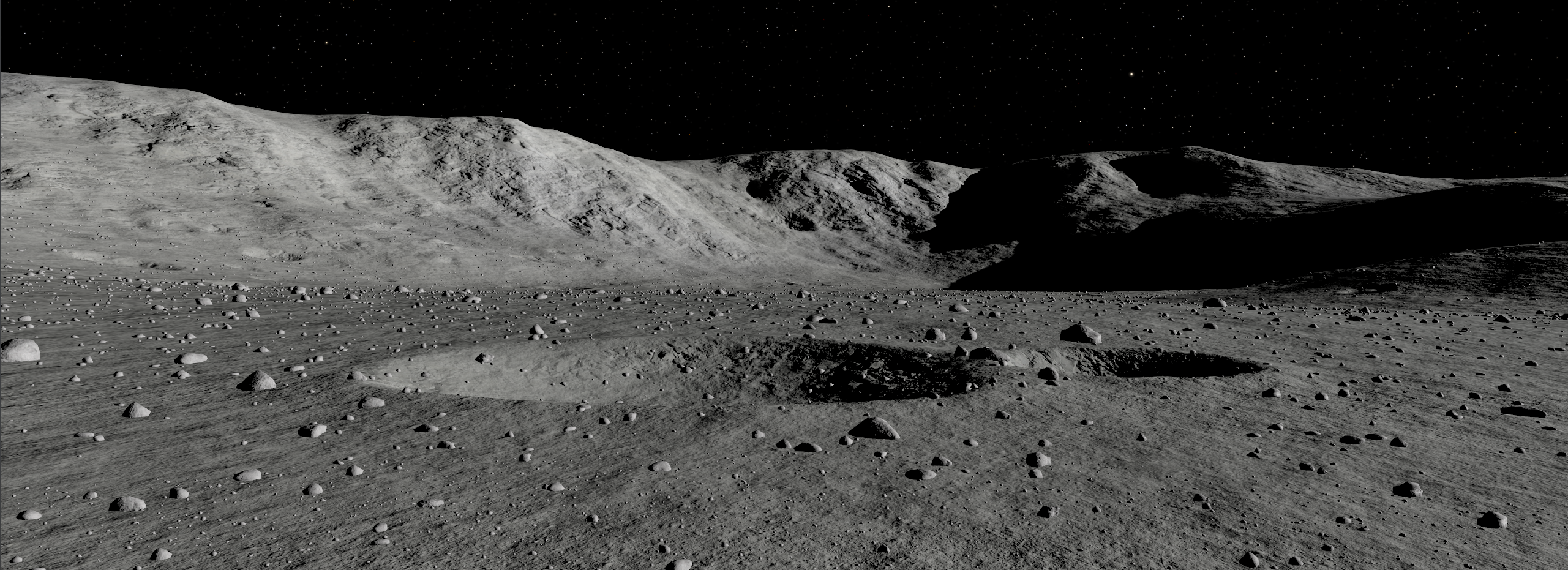

The environments you travel to should feel real and immersive. To achieve that, I'm working on an object generation system capable of populating our worlds with a variety of objects including rocks, trees, grass and anything else you might encounter on a planet's surface.

We need to generate and render a large number of objects at high fidelity, as efficiently as possible, to best communicate these environments to the player. This article covers the three main stages of processing ground clutter on the surfaces of our planets, without diving too far into the technicalities: generation, evaluation, and rendering.

Basics

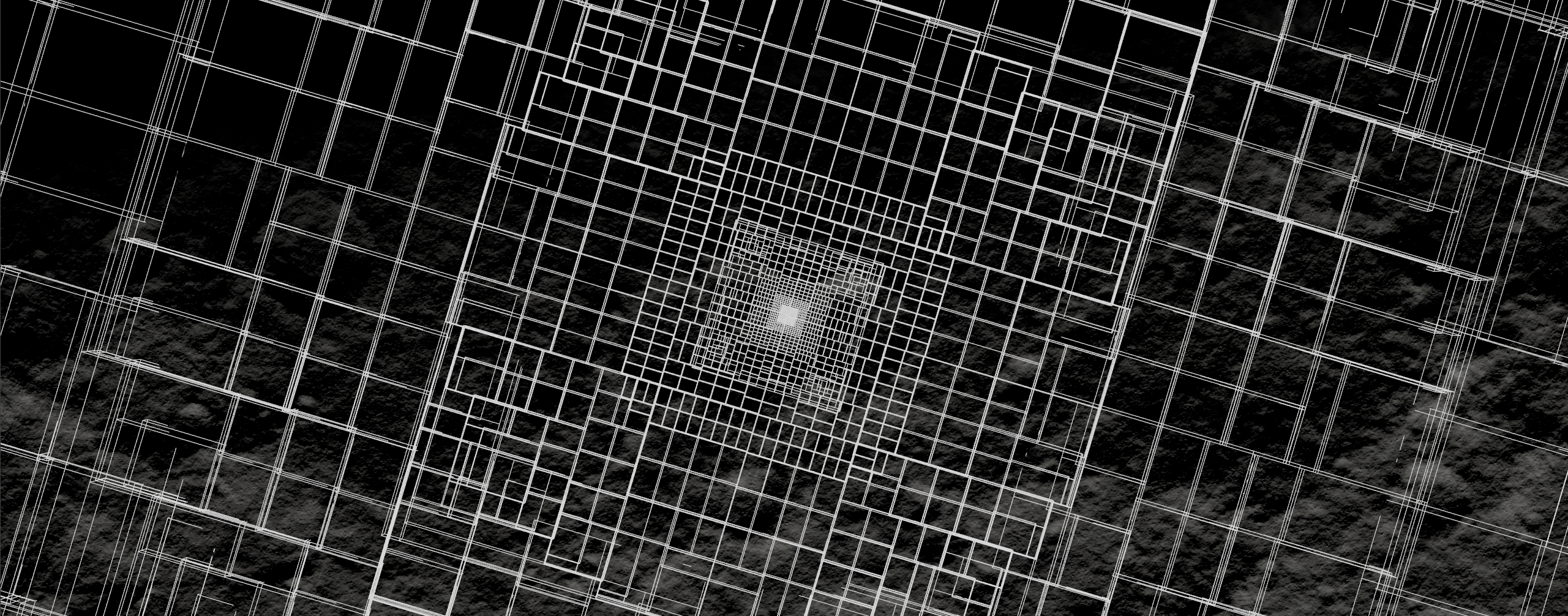

Ground clutter consists of groups of objects called 'ecotypes'. An ecotype describes a set of objects belonging to the same family, and the term is typically used for classifying flora. In our case, however, an ecotype may refer to a group of rocks, a type of grass or a species of tree. Each ecotype may contain a number of different meshes and materials, but they are all generated following the same rules. Here's how ground clutter is currently organized conceptually and in XML:

- Ecotype 1
  - Object 1
    - Material and LODs
  - Object 2
    - Material and LODs
  - Object N...
- Ecotype N...

This setup allows some assumptions to be made about the objects in an ecotype: they will be a similar size and separated by a similar distance on average. This maps perfectly to generating entire ecotypes at once in a chunk-style system.
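To make the hierarchy concrete, here is a minimal sketch of how it could be modelled in code. The class names, the `spacing_m` field, and the `chunk_key` helper are all hypothetical and only illustrate the idea that a shared average spacing lets a whole ecotype be bucketed into fixed-size chunks. This is not KSA's actual data layout.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ClutterObject:
    """One object in an ecotype: a material plus its LOD meshes (hypothetical)."""
    name: str
    material: str
    lod_meshes: List[str]  # ordered from most to least detailed


@dataclass
class Ecotype:
    """A family of objects generated by the same rules (hypothetical)."""
    name: str
    spacing_m: float  # assumed average distance between neighbouring objects
    objects: List[ClutterObject] = field(default_factory=list)


def chunk_key(ecotype: Ecotype, east_m: float, north_m: float,
              cells_per_side: int = 64) -> Tuple[int, int]:
    """Map a local surface position to the integer coordinates of its chunk.

    The chunk size is derived from the ecotype's spacing, so every chunk
    holds roughly the same number of candidate objects.
    """
    chunk_size = ecotype.spacing_m * cells_per_side
    return (math.floor(east_m / chunk_size), math.floor(north_m / chunk_size))


rocks = Ecotype("rocks", spacing_m=2.0, objects=[
    ClutterObject("boulder", "rock_material", ["boulder_lod0", "boulder_lod1"]),
])
print(chunk_key(rocks, 130.0, -1.0))
```

Because the chunk size follows from the spacing, a dense ecotype such as grass would get small chunks and a sparse one such as trees would get large chunks under this scheme.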

Generation

We need to generate objects on demand and quickly, as spacecraft near the surface may be traveling at many kilometers per second. Right now the actual placement behaviour is bare-bones. However, most of the performance cost is already accounted for, because we need to sample the terrain at each potential object location, which means building all the terrain modifiers and raycasting against the terrain mesh.

The above footage shows that object generation is lightning fast and stutter-free (any stutters you do see are from video playback!). To achieve this, the ground clutter system utilizes compute shaders, enabling the usage of the GPU's thousands of threads to do arbitrary work for us. In this case, we need to work out the following for each potential object:

- The latitude/longitude this object should be placed at.
- The height of the terrain at this location.
- The exact position on the planet mesh this object needs to be placed at.
- The rotation, scale, colour, and mesh that should be used to represent this object.

Once we know all that, we have successfully generated an 'object'. This is where the insane parallelism of the GPU can help us, and it is used throughout the entire ground clutter system.
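The per-object steps listed above can be sketched on the CPU as a single function, where one call stands in for one GPU thread. Everything here is an assumption made for illustration: the integer hash, the placeholder `terrain_height` function, the parameter names, and the value ranges. The real system runs in compute shaders and samples the actual terrain.

```python
import math


def hash_to_unit(seed: int, index: int, channel: int) -> float:
    """Deterministic pseudo-random float in [0, 1) from three integers."""
    x = (seed * 0x9E3779B1 + index * 0x85EBCA6B + channel * 0xC2B2AE35) & 0xFFFFFFFF
    x ^= x >> 16
    x = (x * 0x7FEB352D) & 0xFFFFFFFF
    x ^= x >> 15
    x = (x * 0x846CA68B) & 0xFFFFFFFF
    x ^= x >> 16
    return x / 2**32


def terrain_height(lat: float, lon: float) -> float:
    """Placeholder height field in metres (stands in for real terrain sampling)."""
    return 50.0 * math.sin(40.0 * lat) * math.cos(40.0 * lon)


def generate_object(seed, index, lat0, lon0, lat_span, lon_span, radius, mesh_count):
    """Generate one object inside a latitude/longitude patch (angles in radians)."""
    # 1. Latitude/longitude of the object within the patch.
    lat = lat0 + hash_to_unit(seed, index, 0) * lat_span
    lon = lon0 + hash_to_unit(seed, index, 1) * lon_span
    # 2. Terrain height at that location.
    height = terrain_height(lat, lon)
    # 3. Position in planet-centred Cartesian coordinates.
    r = radius + height
    position = (r * math.cos(lat) * math.cos(lon),
                r * math.cos(lat) * math.sin(lon),
                r * math.sin(lat))
    # 4. Appearance: rotation, scale, colour tint, and mesh choice.
    return {
        "lat": lat,
        "lon": lon,
        "height": height,
        "position": position,
        "yaw": hash_to_unit(seed, index, 2) * 2.0 * math.pi,
        "scale": 0.75 + 0.5 * hash_to_unit(seed, index, 3),
        "tint": hash_to_unit(seed, index, 4),
        "mesh": int(hash_to_unit(seed, index, 5) * mesh_count),
    }
```

Since every value is derived from `(seed, index)` alone, each object can be generated independently of its neighbours. That independence is the property that lets thousands of threads work on the same chunk at once.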

But how do we know where exactly on the terrain mesh to put our objects?

KSA uses a technique called spherical billboarding for its terrain. Think of it as if the planet is an eyeball where the terrain becomes more detailed as you approach the pupil and the eye is always rotating so the pupil faces you. This poses a unique challenge for placing objects on the surface - the mesh can move!

The ground clutter system is completely separate from the terrain mesh, so for small objects we need a fast way of finding out the exact location on the surface to place them to prevent them from floating. Raytracing is perfect for this, and we use it in KSA to accelerate finding the surface position. For systems without raytracing we fall back to a software approach which is slightly slower but just as effective.
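The article does not describe how the software fallback works, so the following is only an illustration of the general idea: cast a ray at a terrain triangle and take the hit point as the surface position. It uses the standard Möller–Trumbore ray-triangle test, and the function names and the flat test triangle are assumptions rather than KSA code.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Möller–Trumbore test: distance t along the ray to the hit, or None."""
    e1 = sub(v1, v0)
    e2 = sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det
    if u < 0.0 or u > 1.0:
        return None  # outside the triangle along the first edge
    q = cross(s, e1)
    v = dot(direction, q) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None  # outside the triangle along the second edge
    t = dot(e2, q) * inv_det
    return t if t >= 0.0 else None  # ignore hits behind the ray origin


def surface_point(origin, direction, triangle):
    """Return the point where the ray meets the triangle, or None on a miss."""
    t = ray_triangle(origin, direction, *triangle)
    if t is None:
        return None
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


# A ray fired straight down from 10 units above a flat triangle.
tri = ((0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 4.0, 0.0))
print(surface_point((1.0, 1.0, 10.0), (0.0, 0.0, -1.0), tri))
```

A real implementation would also need an acceleration structure to find candidate triangles quickly, which is the part hardware raytracing provides.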

Evaluation

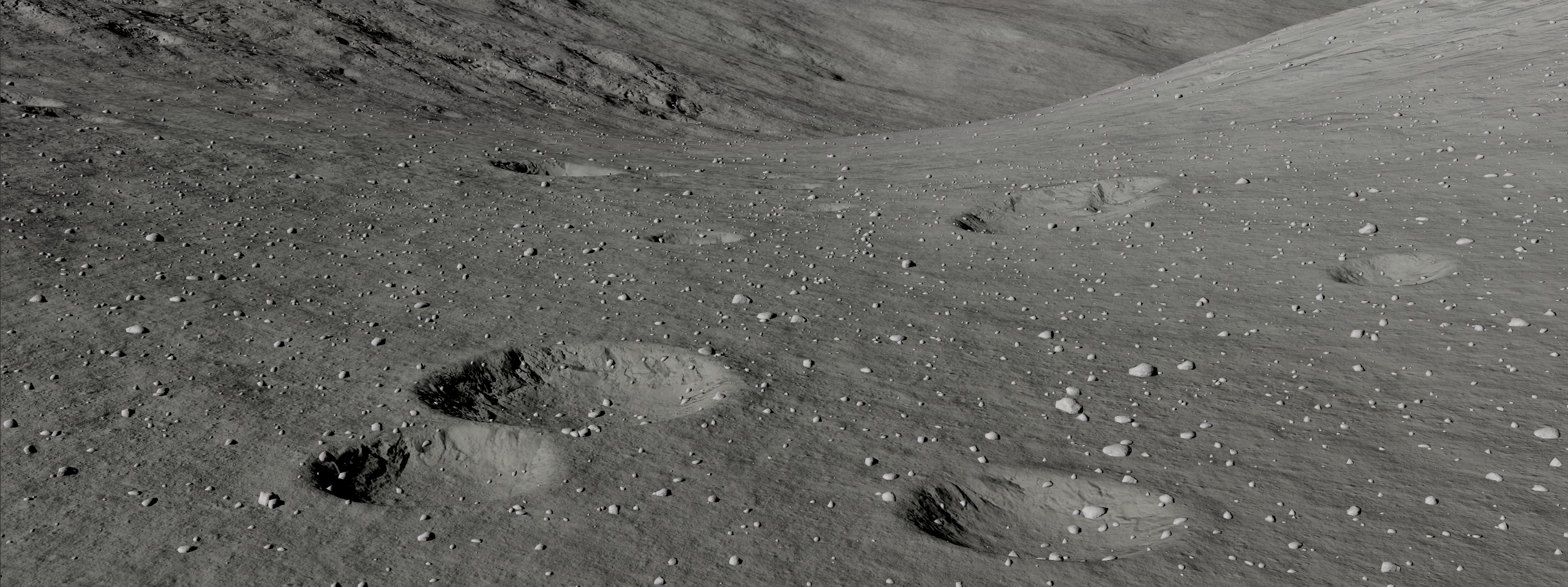

Now that objects can be represented at given positions on the planet, they need preparing for rendering. This means consulting the celestial coordinate frames to work out how to correctly transform the object data (position, rotation, scale) to the frame we need: ECL (Ecliptic), and then camera-relative.

We use compute shaders for this too - each thread is once again responsible for an object and runs this set of instructions:

1. Create the transformation matrix to take us from mesh space to ECL space.
2. Perform frustum culling.
3. Select LOD and manage LOD fading.
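The culling and LOD steps can be sketched as two small functions. This is a sketch under assumptions, not the shader KSA runs: objects are approximated by bounding spheres, frustum planes are given as `(nx, ny, nz, d)` with unit normals pointing inwards, and the distance thresholds and linear fade are hypothetical.

```python
def sphere_in_frustum(center, radius, planes):
    """Return False only if the sphere lies fully outside any one plane.

    A point p is on the inside of a plane when n.p + d >= 0.
    """
    cx, cy, cz = center
    for nx, ny, nz, d in planes:
        if nx * cx + ny * cy + nz * cz + d < -radius:
            return False
    return True


def select_lod(distance, lod_distances, fade_range):
    """Pick a LOD by camera distance and compute a fade factor.

    lod_distances holds the maximum distance for each LOD, in ascending
    order. The fade is 1.0 when the LOD is fully shown and drops linearly
    towards 0.0 over the last fade_range units before the next threshold.
    Returns (None, 0.0) when the object is beyond the final LOD.
    """
    for index, limit in enumerate(lod_distances):
        if distance < limit:
            fade_start = limit - fade_range
            if distance <= fade_start:
                return index, 1.0
            return index, (limit - distance) / fade_range
    return None, 0.0


# Two planes of a toy frustum: near at z = 1 and far at z = 100.
planes = [(0.0, 0.0, 1.0, -1.0), (0.0, 0.0, -1.0, 100.0)]
print(sphere_in_frustum((0.0, 0.0, 50.0), 1.0, planes))
print(select_lod(45.0, [50.0, 200.0, 1000.0], 10.0))
```

Testing spheres against planes is conservative: an object that straddles a plane is kept, so nothing visible gets culled by mistake.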

There are two extra steps after this, which I'll cover briefly for those interested. The first reorders the data output by the evaluation stage into blocks indexed by [object type][LOD]; the second generates draw commands referencing this reordered data. We reorder the data to group every object and every LOD into contiguous blocks, so that the second step generates the lowest number of draw commands for instancing.
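One common way to do this kind of reordering is a counting sort: count the instances per block, take a prefix sum to get each block's start offset, then scatter. The sketch below assumes that approach and uses hypothetical names throughout. The command fields loosely mirror what an indirect draw command typically carries, and none of this is confirmed as KSA's implementation.

```python
def build_draw_commands(visible, object_count, lod_count):
    """Group visible instances into [object type][LOD] blocks.

    visible is a list of (object_type, lod, transform) tuples as they might
    come out of the evaluation stage. Returns the reordered transforms and
    one draw command per non-empty block.
    """
    block_count = object_count * lod_count

    # Step 1a: count how many instances fall into each block.
    counts = [0] * block_count
    for object_type, lod, _ in visible:
        counts[object_type * lod_count + lod] += 1

    # Step 1b: exclusive prefix sum gives each block's start offset.
    offsets = []
    running = 0
    for count in counts:
        offsets.append(running)
        running += count

    # Step 1c: scatter each transform into its block.
    cursor = list(offsets)
    sorted_transforms = [None] * len(visible)
    for object_type, lod, transform in visible:
        block = object_type * lod_count + lod
        sorted_transforms[cursor[block]] = transform
        cursor[block] += 1

    # Step 2: one instanced draw command per non-empty block.
    commands = [
        {
            "object_type": block // lod_count,
            "lod": block % lod_count,
            "first_instance": offsets[block],
            "instance_count": counts[block],
        }
        for block in range(block_count)
        if counts[block] > 0
    ]
    return sorted_transforms, commands


visible = [(1, 0, "a"), (0, 1, "b"), (1, 0, "c"), (0, 0, "d")]
transforms, commands = build_draw_commands(visible, object_count=2, lod_count=2)
print(transforms)
print(commands)
```

Each command then refers to a contiguous slice of the transform buffer, so a single mesh and LOD can be drawn with one instanced call regardless of how many copies are visible.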

Rendering

What I've been describing so far is called a "GPU Driven Renderer". Aside from supplying a small amount of data describing our surface objects, generation parameters and matrices, the CPU largely takes a back seat. This method of rendering means we get to avoid a significant amount of synchronization between the CPU and GPU (which takes up valuable time!) and can focus on churning through the data quickly.

Now we have thousands of objects to render and need to draw them fast. The system so far maps perfectly to GPU instancing, which is a method of rendering all of these objects at once. Rather than iterating through individual objects and saying "draw this mesh here", we say "draw N meshes using data from this buffer containing our object transforms". The way everything is set up means we only need to submit one draw command from the CPU to draw all ground clutter object types and LODs! When more rendering materials are supported, we will need to submit one per material instead.

Next Steps

You may have noticed that at the moment, ground clutter is placed indiscriminately. Next I'll be working on making placement more flexible and introducing parameters and textures to control it, as well as making ground clutter configurable per-biome. Multi-material support is also on the list, but we don't yet have a way of creating the render resources for materials dynamically at runtime. Last but not least are collisions, but those are a long way off.

Thanks for reading!